RipeNow

A Produce Selection Solution to Inspire Confidence in Everyday Shoppers

An Overview

The Logistics

- Project for HCI Research Methods, August 2017 to December 2017

- Teammates: Ethan Graves, Maria Wong, Cooper Colglazier

- IRB approved project

My Role

- UX Design and UX Research on a team with 3 other UX Designers and Researchers

- Created Storyboard artifact for summarizing preliminary qualitative research

- Facilitated problem statement prioritization based on my prior experience with The Home Depot

- Designed initial low-fidelity wireframes for the mobile application solution

- Contributed to the two final prototypes using Sketch as a tool

My Biggest Takeaway

Not every problem can or should be solved with a screen interface alone. Instead, the root problem should be addressed first; by addressing it in conjunction with user needs, the right medium becomes apparent.

Research

Preliminary Interviews

To diverge in the problem space, we conducted 8 semi-structured interviews around the experience of buying produce. Since produce shopping is such an everyday task, we chose not to limit the scope of our initial interviews. This way we could later target specific, prioritized activities whose impacts could be far-reaching.

We based our initial interview questions around three categories:

From these areas of interest a number of pain points began to arise, primarily regarding the self-checkout procedure with produce and confidence in selection. Users mentioned things like “I won’t go through a self checkout if I know I’m buying produce,” and even “I never buy pineapple because I don’t know how to tell if it’s ripe.”

Contextual Inquiries

When we discussed the problems with peers, they raised the concern that since produce shopping is so habitual, users may not be able to relay their processes. To address this concern and to validate our initial findings, our team conducted 7 Contextual Inquiries.

Each user was told to select their typical grocery items. In our initial interviews we found a trend of our users mentioning they buy the same groceries every week. We decided to replicate that behavior with our Contextual Inquiry. While our users were making their selections we questioned their actions and motives.

One user in particular picked up a lemon and smelled it. He quickly put it in the cart without much thought. When asked why he smelled the lemon his response was “I’m not sure, isn’t that what you are supposed to do?” It was becoming clear that our users had an understanding of how to select produce, but really didn’t know why they did it, or if it even worked.

We also asked our users to follow a recipe that included some obscure vegetables. One user was selecting a red onion, but she had never purchased a red onion before. Since she lacked the domain knowledge she relied completely on the photo used in the recipe to select the onion of choice. She even mentioned that the real onions didn’t look a lot like the photos. When asked about her confidence with her selection, she replied “honestly I just guessed.”

To synthesize the contextual inquiries, we created an affinity map, which helped us notice patterns and high-level problems. We later came back to this large affinity map when creating problem statements.

Our contextual inquiries gave us a chance to see our users within their environment. We found our users were often confident in selecting very specific fruits and vegetables, the ones they bought most often. But when faced with purchasing a new or unusual fruit, they lacked the skills or knowledge to make an informed decision.

Storyboarding the Contextual Inquiries and Interviews

Quantifying the Space

Surveying our Users

Our team understands that while grocery shopping is a ubiquitous occurrence, it is also regionally influenced. Therefore, in order to validate our local results from the preliminary interviews and the Contextual Inquiries, we released a survey online. Our survey had more focus than the preliminary interviews, as we were really interested in our users’ habits and their mental model of grocery stores. Likewise, we were interested in understanding the demographic makeup of our users, and whether that makeup correlated with specific shopping habits (perhaps a learned behavior).

We distributed our survey on Reddit and through our personal social media accounts, hoping for snowball sampling. We later conducted the survey analysis with the tools built into Qualtrics (our survey platform), Excel, and Tableau. In total we had 22 questions spanning the categories of:

- How aware our shoppers are of their grocery bill total while shopping

- Where our users shop

- If they grocery shop online

- Which factors affected what produce they purchased (ranked by our users)

In total we received 151 responses from around the United States. We found a trend that answers changed with the age demographic of our users (e.g. total bill became less of a concern with increasing age). Age later became the differentiating factor that allowed us to build our personas.

From both our survey and Contextual Inquiry we found a clear trend of our users wanting more information regarding “what” they were buying in the stores. While checking out was a pain point, it surfaced more as an annoyance than total confusion.

Prioritizing Pain Points

Generating Personas

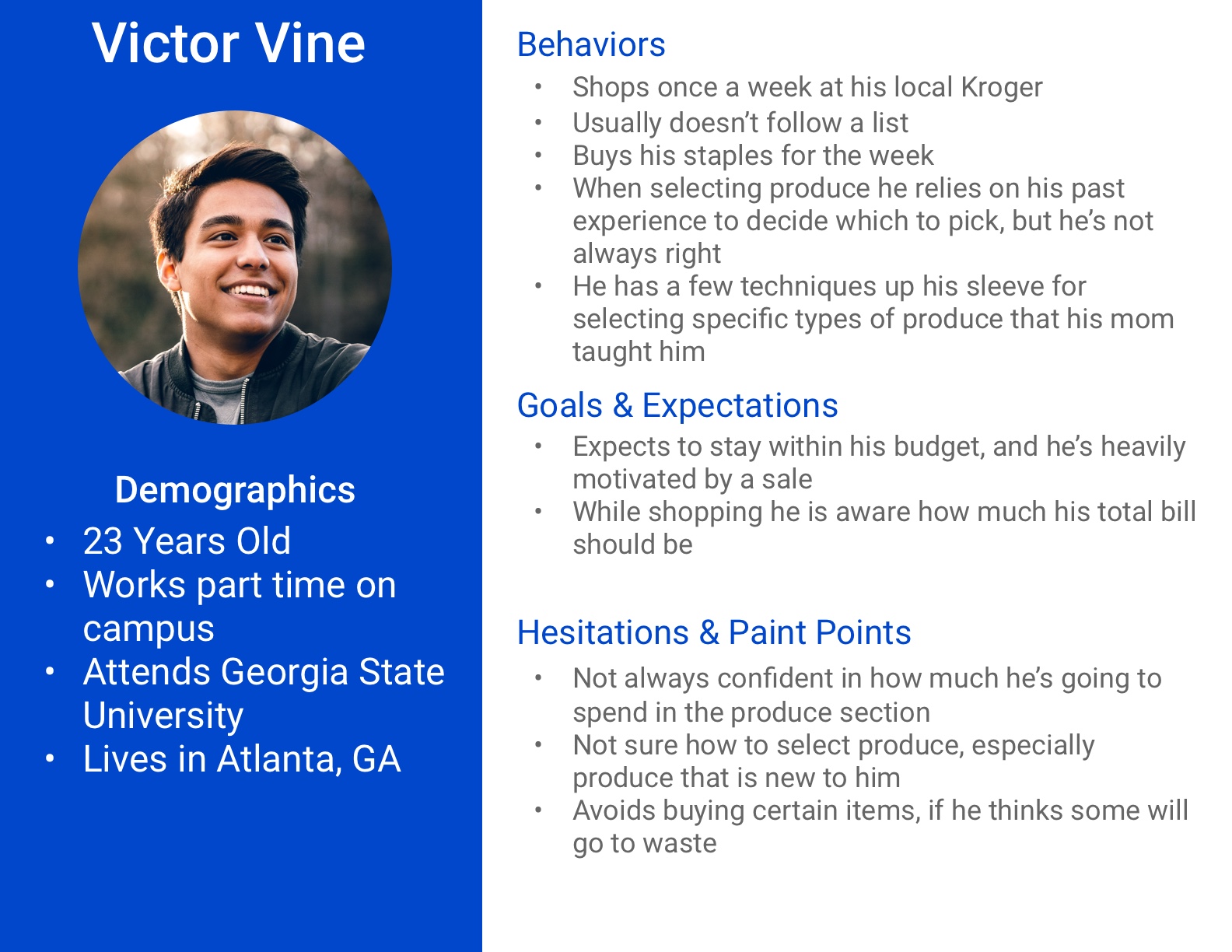

In order to empathize with our users, and differentiate their needs we created two personas: The Aware Shopper, and the Experienced Shopper. We used data gathered from the surveys, and our contextual inquiries to build out these personas. We used these personas to create problem statements from their perspective. Example: As an Aware Shopper I want to know how much I’m going to spend, so I stay within my budget.

Creating Problem Statements

Based on both our personas and our affinity map from the contextual inquiries we were able to create 16 problem statements.

Aware Shoppers:

- As an Aware Shopper, I use packaging to gauge quality, and convenience.

- As an Aware Shopper, I will compare quantity with price versus how much I need, so I can get the best deal.

- As an Aware Shopper I want to know how much I’m going to spend, so I stay within my budget.

- As an Aware Shopper, I wish I could keep track of how much I use or how long produce lasts before going bad, so that I know how much to buy.

- As an Aware Shopper, I have yet to develop reliable techniques for identifying product I need, so I can buy the best product.

Experienced Shoppers:

- As an Experienced Shopper, I am conscious of packaging so that I am not wasteful.

- As an Experienced Shopper, I know how much I use, so I always buy the same amount to avoid waste.

- As an Experienced Shopper, I will compare price and quantity to get enough of what I need for the best price.

Both:

- As an Aware Shopper and an Experienced Shopper sometimes I like to browse and sometimes I like to go directly, so I can find my items.

- As an Aware Shopper and an Experienced Shopper, I keep stock of my staples, so I have enough food I like at home.

- As an Aware Shopper and an Experienced Shopper, I use information I’ve acquired passively to ensure I am choosing the best produce for me.

- As an Aware Shopper and Experienced Shopper, I don’t know how to select product that is new to me, so I can make an educated choice.

- As an Aware Shopper and Experienced Shopper, I am overwhelmed by the options of items I am unfamiliar with, but I want to make the best choice.

- As an Aware Shopper and Experienced Shopper, I need things that are easy to prepare, so I can get onto more important things.

- As an Aware Shopper and Experienced Shopper, I need an objective concrete method to gauge ripeness so I don’t feel like I’m guessing.

- As an Aware Shopper and Experienced Shopper, I need to be aware or have the information to make changes on the spot in order to complete my plan.

Prioritizing Problem Statements

To narrow our focus and ensure we were solving the largest pain points for our users, we prioritized the problem statements on a 2x2 matrix. This method allowed us to compare each problem’s user need and frustration. In the end we had a visual of our users’ top pain points that we could tackle.

Problem statements that fell within the top right quadrant (high frustration and high user need) became our top priority. Likewise the 2 stickies that fell in the top left quadrant we felt needed to be upheld for our users, since they were high user need and currently a low frustration. With this in mind our top problem statements to address were:

- As an Aware Shopper I want to know how much I’m going to spend, so I stay within my budget.

- As an Aware Shopper, I wish I could keep track of how much I use or how long produce lasts before going bad, so that I know how much to buy.

- As an Aware Shopper and Experienced Shopper, I need to be aware or have the information to make changes on the spot in order to complete my plan.

- As an Aware Shopper and Experienced Shopper, I need an objective concrete method to gauge ripeness so I don’t feel like I’m guessing.

- As an Aware Shopper, I have yet to develop reliable techniques for identifying product I need, so I can buy the best product.

- As an Aware Shopper, I will compare quantity with price versus how much I need, so I can get the best deal.
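The quadrant logic behind the 2x2 matrix can be sketched in a few lines of Python. This is only an illustration of the sorting rule described above (top right first, then top left), not a record of how we scored anything: the `ProblemStatement` class, the 0 to 10 rating scale, the midpoint of 5, and the example labels and scores are all assumptions made up for the sketch.

```python
from dataclasses import dataclass


@dataclass
class ProblemStatement:
    label: str
    user_need: float    # hypothetical 0-10 rating (vertical axis)
    frustration: float  # hypothetical 0-10 rating (horizontal axis)


def quadrant(ps: ProblemStatement, midpoint: float = 5.0) -> str:
    """Place a statement in one quadrant of the 2x2 matrix."""
    vertical = "top" if ps.user_need >= midpoint else "bottom"
    horizontal = "right" if ps.frustration >= midpoint else "left"
    return f"{vertical}-{horizontal}"


def prioritize(statements: list[ProblemStatement]) -> list[ProblemStatement]:
    """Keep high-need statements: top-right first, then top-left."""
    top_right = [s for s in statements if quadrant(s) == "top-right"]
    top_left = [s for s in statements if quadrant(s) == "top-left"]
    return top_right + top_left


# Made-up placeholder data, not ratings from the study.
stickies = [
    ProblemStatement("A", user_need=8, frustration=9),
    ProblemStatement("B", user_need=9, frustration=2),
    ProblemStatement("C", user_need=2, frustration=8),
    ProblemStatement("D", user_need=1, frustration=1),
]
print([s.label for s in prioritize(stickies)])
```

In practice the placement happened by discussion with stickies on a wall; numeric ratings are just one way to make the same comparison explicit.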

Design

Ideation Session

Once we had focus on which problems to solve, we could allow ourselves to create solutions. We were very conscious of allowing any idea to surface, no matter how “crazy” it seemed. Each team member ideated individually on sticky notes, keeping our problem statements in mind. We then put those stickies on the board, grouping similar ideas and expanding on each other’s. In the end we left our ideation session with four ideas.

Multi-User Kiosk

- Provides selection information

- End of aisle, semi-circular

- Display Ads to encourage store usage

- Scale is determined by usage, and store space

- Displays an item, shows information about item (ripeness, origin, stock)

- Create grocery lists

- Prints a grocery list on receipt paper for user

Dynamic Physical Model

- Fixed on cart (but could be on rails per aisle)

- Physical Model that changes firmness dynamically

- iPad allows users to see details about produce

- Physical model serves as reference when selecting a specific item

- Dynamic signage, encourage use in grocery stores, cut down on labor cost of printing signs

Hypercam (imaging software that scans fruit)

- Based on the paper: HyperCam: Hyperspectral Imaging for Ubiquitous Computing Applications

- On rails in store to cut down on store investment

- Scan multiple at the same time

- Enter information

- Maybe point to the right one?

Mobile Application that has information on gauging ripeness, functions as a shopping list

- Saved Items (staples)

- Seasonal Information

- Shopper Profile Setup

- Add recipes to the list

- Keeps track of totals

- Technique Symbols

- Detailed info for ripeness

- Card layout for ripeness

Concept Testing

After iterating on these basic ideas as a group, we individually created low-fidelity prototypes of these four solutions. We then tested the four solutions with 4 participants. We had each user try every solution and give their opinion on the system. This was relatively informal, as our prototypes were at varying fidelities, and we instead focused on the conceptual model of the system.

Through testing with our four users, below are some of the common findings, which ultimately shaped which solution to move forward with.

Multi-User Kiosk Findings

- Users generally responded with an interest in the system, specifically the feature of printing a receipt with ingredients

- A few users raised the concern that when people are in stores they generally know what they are looking for and may not interact with the kiosk to look up new things

- Sorting keyboard was not well received by participants

- One user raised the concern that typing in to search only worked if the user knew what they were searching for, which inhibits the explorative intentions of the kiosk

Overall our users were unsure of the practicality of using this regularly in a grocery store, besides the novel nature of the system.

Dynamic Physical Model Findings

- confused by the order of actions, unsure when to use the physical model and when to pick up the real item

- struggled to compare the two items, since one was a simulation and the other the actual item; mentioned different techniques they used to squeeze an avocado versus something like a lemon

- most users compared the colors presented on the interface rather than interacting with the physical elements

- users raised hygiene concerns of the shared touched system

Overall the functionality asked too much of the user. Currently they might compare one or two items against each other; we asked them to compare a fake item to a real item, which doesn’t actually provide a more efficient solution, just an alternative to the current methods.

Hypercam Findings

- Drawn to the ease of use

- Questioned how it worked

- Concerned with its scaling

Overall users were very pleased with the system. One user used Hypercam first, and then the physical model only to remark “I thought it would pick out my produce for me like the other [system]”

Mobile Application Findings

- Confused about the purpose of many of the functions

- Core purpose was lost, some users thought it was a recipe database app

- Main functionality of the redesigned grocery list, to be a detail breakdown, was lost on users

- Users questioned how to flip the cards (could be a fidelity issue)

- Generally understood the application flow and the use case, but were reluctant to say they would use it every time they shopped

Overall users were indifferent toward the solution. It was a mobile application, and all of our users were familiar with the idea and the interactions. It asked the user to front-load a lot of their work, and provided them with too many features. In other words, we lost focus on our users’ actual pain points and solved problems they didn’t have.

Usability Testing Hypercam

Refining the Hypercam

Based on the results of our first user tests we decided to move forward with our Hyper Camera solution. We applied some of the feedback we received in the first informal session, and conducted another set of more formal user testing with a new group of users.

In our first tests users had commented on using the blemishes and aesthetic signifiers of the apples as tells for which to select (in addition to the on-screen cue). In order to limit our variables, as we were mainly interested in the spatial mapping from the screen to the physical world, we tested with kiwis (a fruit with significantly fewer variations, and one that isn’t completely familiar to our users).

This set of testing surfaced three insights. First, participants were counting from the edges of the display to locate the selected fruit, which brought us back to the question of whether the system was scalable. Second, we received a lot of positive feedback in terms of trusting the system (even though it was a medium-fidelity prototype). Finally, our users mentioned a few times that it would be helpful to have their hand as a reference (e.g. a live video) when reaching out to select their fruit.

We took this into consideration when iterating on the design once again.

A/B Within Subjects Formal Usability Test

In our third test we were mainly concerned with testing if our users could grasp the spatial reasoning aspect of the design. We felt unclear if the results from the two prior tests were reliable.

We implemented a live video feature into the prototype. We wanted to test if our users used this dynamic playback to watch their hand on the display to aid them in locating the produce.

We also considered the fact that our users were counting from the edge of the display. Knowing this would become harder as the displays scaled up we were curious if we could introduce more landmarks into our design and see a similar result.

So, for our third round of user testing we built an additional rig, this time with pegs distributed throughout. We also built a camera rig to hold a GoPro. We collaboratively figured out a way to livestream video from the GoPro to a laptop, overlay the selection onto that screen, and have each screen of the interface on a separate Mac desktop. This was a hodgepodge solution, and reminiscent of something you would read about in Jake Knapp’s Sprint book, but it served well in testing.

Also just a note, we switched to plastic playpen balls. This was a cost saving measure, as well as a way to limit our variables. [Lesson Learned: It did the opposite, our users used the patterns made by the different colors of the balls as indicators which to choose.]

In order to test our two design variations (with and without pegs), we used a within-subjects A/B test design. This allowed our users to talk about both experiences and compare them. In addition to the standard debrief with the usability test, we also conducted a product reaction card activity for each prototype. This allowed our users to describe their experience with the two systems using a limited vocabulary, which later served as the starting point of the debrief discussion. At the very end of the test we had our users complete the System Usability Scale (SUS) questionnaire in order to quantify the usability of the system as a whole.
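For readers unfamiliar with SUS, here is a minimal sketch of the standard scoring rule: ten items rated 1 to 5, where odd-numbered items contribute (rating minus 1), even-numbered items contribute (5 minus rating), and the sum is multiplied by 2.5 to give a 0 to 100 score. The function name `sus_score` and the example responses are made up for illustration; they are not our participants’ data.

```python
def sus_score(responses: list[int]) -> float:
    """Score one participant's SUS questionnaire (10 items, each rated 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher rating is better.
            total += rating - 1
        else:
            # Even items are negatively worded: lower rating is better.
            total += 5 - rating
    return total * 2.5


# Hypothetical responses for a single participant.
example = [4, 2, 5, 1, 4, 2, 4, 1, 5, 2]
print(sus_score(example))
```

Scores from several participants are typically averaged to describe the system as a whole.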

Results of Formal Usability Testing

We were able to test with 7 participants, and each used our two prototypes. Our users conceptually understood the system, its use cases, and its functionality.

One user even commented it reminded him of a checkout system, “you use it once and it becomes second-nature”. Likewise, our participants utilized the live feedback of the system consistently. They readily used their hand as a guide for double checking if they had selected the indicated item.

When comparing the two versions of the prototype we had inconsistent results. About half of the users we tested commented that the pegs in the prototype helped them select their item. The other half mentioned not noticing the pegs, or relying completely on the live feedback.

We also received interesting feedback on system visibility and trust in the system as a whole. A few participants mentioned they would prefer to know how the system was working, as a vote of confidence in the system’s credibility. Other participants immediately trusted the system, and when asked about their trust made comments like “there is no reason not to trust it.” This could be due to both the testing environment and the predominantly technical backgrounds of our users. Regardless, moving forward we would implement some sort of system indication, or a clear signifier of how the system works.

Cognitive Walkthrough

In addition to facilitating user tests with our two rigs, we also had our peers perform cognitive walkthroughs. We were curious about the learnability of the system. This experience is similar to an ATM in the sense our user needs to approach the system, quickly understand what is happening and move along. Likewise, it was one of our problem statements not to interfere with their routine shopping habits. The cognitive walkthrough allowed us to roughly estimate the cognitive load of each of our steps.

Results of Cognitive Walkthroughs

The results of the cognitive walkthroughs were positive. All of our participants mentioned how short and accurate the interaction with the interface was. The information was clearly presented and straightforward.

The only concerns our participants raised were about retrieving the selected item. They mentioned accessibility problems and overall system trust. One participant mentioned that if the system was ever wrong, that is, if someone got home and didn’t like their produce, it would never be used again. They therefore suggested being mindful of the language and not claiming to be 100% accurate. Overall the cognitive walkthroughs echoed a lot of what we had been hearing during the user testing.

The Solution

The Final User Flow

Looking Ahead

While we were reporting out to a group of industry professionals, one raised a concern: you have built a system where people can trust you are selecting ripe fruits; now you need to ensure your definition of ripe and the user’s definition of ripe match. In other words, we need to ensure our system matches our users’ preexisting mental model of ripeness. This is something we would need to test in the near future to ensure our system can meet our users’ expectations.

Likewise, moving forward, we would scale the project to a grocery store size. A lot of our tests revolved around the spatial reasoning required to grab the indicated item. Even after 3 sets of tests we cannot say with complete confidence how easy this task is to accomplish. Therefore, it is only fair to say the system must be scaled when considering the overall feasibility and likelihood of a similar system being implemented.